The 'YOLO RUN' and a Billion-Dollar Vision

Replit’s Reveal, Extending LLM Tokens, Chat with ANY image + more!

Greetings everyone,

👋 Deiniol here - founder of AI Suite.

Here is your weekly dose of AI broken down into 3 highlights, 2 tools and 1 demo/tutorial.

Hope you enjoy 🦘

We are honoured to partner with:

NewArt explores philosophical, psychological, ethical and social questions that emerge from Generative AI, and reflects on the challenges and opportunities of having disruptive technology as an assistant. I read every publication. Don’t miss it, join the conversation now!

“I am certain we will discover new properties which are currently unknown (in) Deep Learning and I fully expect that the systems in 5-10 years will be much much better than the ones we have now.”

- Ilya Sutskever (OpenAI)

1 - Replit’s Reveal

A LLaMa-style LLM to Revolutionise Code Completion and $1.16B

Introducing Replit's latest creation, 'replit-code-v1-3b': an open-source, LLaMa-style LLM that's about to flip the coding world on its head. Trained on 525B tokens of code, this bad boy outperforms comparable models by a smooth 40%.

Replit's not stopping there, folks. They've transformed their entire IDE into a slick set of "tools" for an autonomous agent. Tell it your wildest programming dreams, and watch the magic happen. Example: draft a REPL, write an app, and deploy it. Oh, and did we mention it was trained in a mere 10 days? They didn't call it the "YOLO RUN" for nothing.

Replit-code-v1-3b's got a knack for non-coding reasoning, too. Despite being trained entirely on code, it performs incredibly well when benchmarked against models trained for reasoning tasks. They’re also aiming to scale this 2.7B parameter masterpiece up to a jaw-dropping 7B. Hello, dev community.

As if that's not enough, Replit's got an extra $97.4M tucked in their pocket from a Series B extension, bumping their valuation to $1.16B. They're ready to expand their cloud services and dominate AI for software creation. So hold onto your keyboards, because Replit's on a mission to empower a billion software developers — and they're one giant leap closer!

2 - Extending LLM tokens:

The viral paper that's stretching AI memory to new lengths

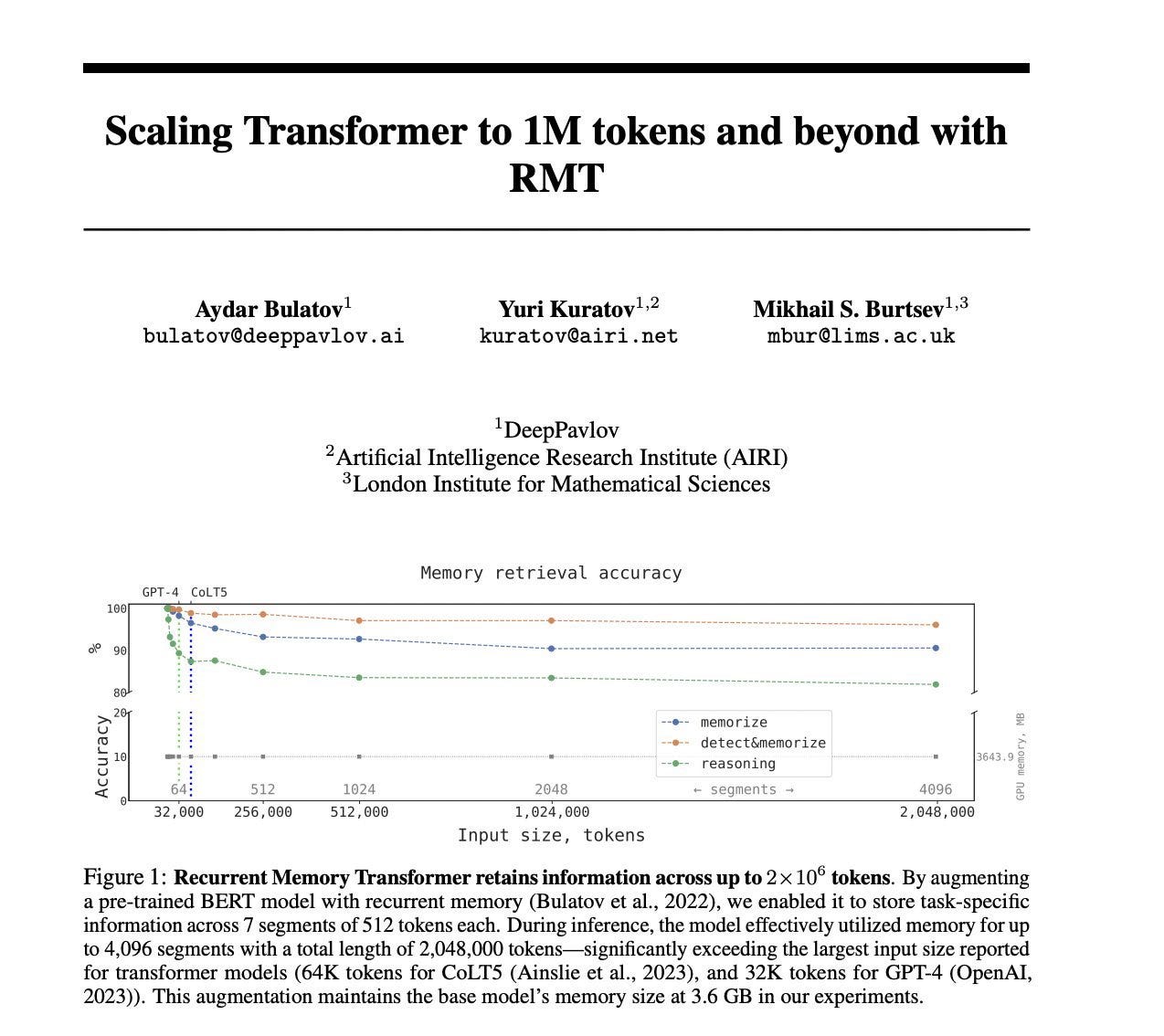

Remember when GPT-4 wowed us with its ability to process 32,000 tokens (~50 pages of documents)? Well, buckle up buttercup, because the Recurrent Memory Transformer is about to blow your digital socks off.

This tech marvel can retain information across a whopping 2 million tokens. To put that into perspective, the entire Harry Potter series is around 1.5 million tokens. Insert dramatic gasp here.
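For a rough sense of scale, here is a back-of-the-envelope sketch in Python that uses only the figures quoted above: 32,000 tokens for roughly 50 pages, 2 million tokens for the Recurrent Memory Transformer, and around 1.5 million tokens for the Harry Potter series. The pages-per-token conversion is an assumption derived from that "~50 pages" estimate, not a measured value, and the function names are made up for illustration.

```python
# Figures quoted in this newsletter; treat all of them as rough estimates.
GPT4_CONTEXT_TOKENS = 32_000
GPT4_CONTEXT_PAGES = 50            # "~50 pages" for 32,000 tokens
RMT_TOKENS = 2_000_000
HARRY_POTTER_TOKENS = 1_500_000


def context_ratio(large: int, small: int) -> float:
    """How many times the smaller window fits into the larger one."""
    return large / small


def tokens_to_pages(tokens: int) -> float:
    """Convert tokens to pages using the ~50 pages per 32,000 tokens estimate."""
    return tokens * GPT4_CONTEXT_PAGES / GPT4_CONTEXT_TOKENS


def fraction_used(document_tokens: int, window_tokens: int) -> float:
    """Share of a context window that a document would occupy."""
    return document_tokens / window_tokens


if __name__ == "__main__":
    print(context_ratio(RMT_TOKENS, GPT4_CONTEXT_TOKENS))    # 62.5
    print(tokens_to_pages(RMT_TOKENS))                       # 3125.0
    print(fraction_used(HARRY_POTTER_TOKENS, RMT_TOKENS))    # 0.75
```

In other words, under these estimates the 2 million token figure is about 62 times the 32,000 token window, and the whole Harry Potter series would fill roughly three quarters of it.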

What can we expect from this supercharged memory maestro?

Legal aid: Speeding up legal document analysis for lawyers, saving time and resources.

History detective: Unveiling hidden connections in massive historical records, enlightening our past.

Medical sidekick: Crafting personalised treatment plans by analysing extensive medical data and tailoring healthcare.

Climate crusader: Tackling climate change with in-depth data analysis, guiding eco-friendly policies.

The Recurrent Memory Transformer is pushing the boundaries of BERT, one of the most effective models in natural language processing. The results? Improved long-term dependency handling and large-scale context processing for those memory-hungry applications.
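If you're wondering how "memory" can stretch across millions of tokens, the toy sketch below may help. It is not the paper's implementation: it only illustrates the general idea of reading a long input in fixed-size segments and carrying a small, bounded memory from one segment to the next. Everything here is hypothetical, including the names `split_into_segments`, `toy_step` and `run_with_memory`, and the "model" is a plain Python function standing in for a real neural network.

```python
from typing import Callable, Iterator, List, Sequence

Memory = List[str]


def split_into_segments(tokens: Sequence[str], segment_len: int) -> Iterator[Sequence[str]]:
    """Yield consecutive chunks of at most `segment_len` tokens."""
    for start in range(0, len(tokens), segment_len):
        yield tokens[start:start + segment_len]


def toy_step(memory: Memory, segment: Sequence[str], memory_size: int = 4) -> Memory:
    """Stand-in for the model (an assumption, not a real network).

    It keeps the most recent tokens that start with 'fact:' and drops the
    oldest ones once the memory holds more than `memory_size` items.
    """
    facts = [tok for tok in segment if tok.startswith("fact:")]
    return (memory + facts)[-memory_size:]


def run_with_memory(
    tokens: Sequence[str],
    segment_len: int,
    step: Callable[[Memory, Sequence[str]], Memory] = toy_step,
) -> Memory:
    """Process a long sequence segment by segment, passing memory along."""
    memory: Memory = []
    for segment in split_into_segments(tokens, segment_len):
        memory = step(memory, segment)
    return memory


if __name__ == "__main__":
    # One fact at the very start, then 10,000 tokens of noise.
    tokens = ["fact:key=42"] + ["noise"] * 10_000 + ["question"]
    print(run_with_memory(tokens, segment_len=512))  # ['fact:key=42']
```

The point of the toy: each step only ever sees one 512-token segment plus a tiny memory, yet the fact from the first segment is still available at the end.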

Let the AI revolution continue, but maybe grab some popcorn first. This is about to get interesting. GitHub repo here.

3 - Hugging Face’s Chat:

Open-sourcing ChatGPT (kinda)

Scooch over, ChatGPT, because there's a new, open-source alternative in town: HuggingChat.

Born from the creative minds of Hugging Face, a startup with millions in venture capital, HuggingChat is ready for action through its web interface and API integration.

The brain behind the bot comes from Open Assistant, a project by LAION, the German nonprofit whose dataset was used to train Stable Diffusion. Like all text-generating models, HuggingChat has its hiccups. It's prone to the usual hallucinations, but hey, it won't indulge in toxic or illegal requests, so that's a win.

HuggingChat is the latest member of a growing family of open-source alternatives to ChatGPT, like Stability AI's StableLM.

The open-source revolution isn't slowing down – embrace the unpredictable world of AI chatbots!

⚙️ Tool 1

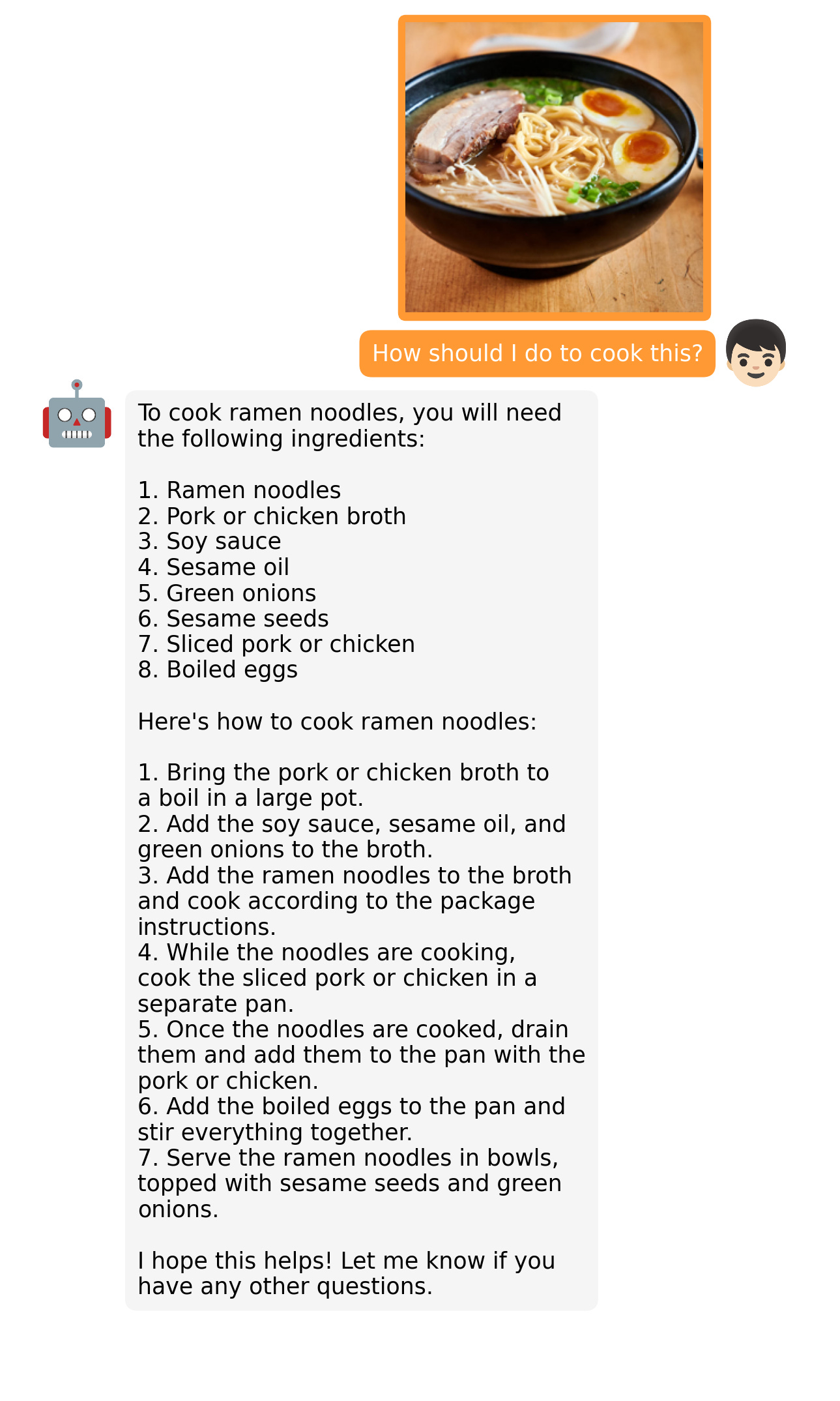

MiniGPT-4 | Chat with ANY image

Tired of waiting to take advantage of GPT-4’s multimodal abilities? I was too. Until I met MiniGPT-4.

GPT-4 is leaving its peers in the dust, pioneering uncharted territory with its multimodal capabilities. From building websites straight from handwritten sketches to spotting the punchline in a picture, MiniGPT-4 is seemingly capable of everything Greg Brockman demonstrated in the GPT-4 launch, leaving other vision-language models green with envy.

🦾 MiniGPT-4 flexes its muscles by:

✅ Creating websites from scribbles

✅ Crafting detailed image descriptions

✅ Penning poetry inspired by visuals

✅ Whipping up recipes from food snaps

And much more. The truly exciting thing about this is the unknown use cases we will soon discover. Behold, the future of AI is here, and it's dazzling.

Got a potential use case for this? Let me know on Twitter or Substack Notes.

⚙️ Tool 2

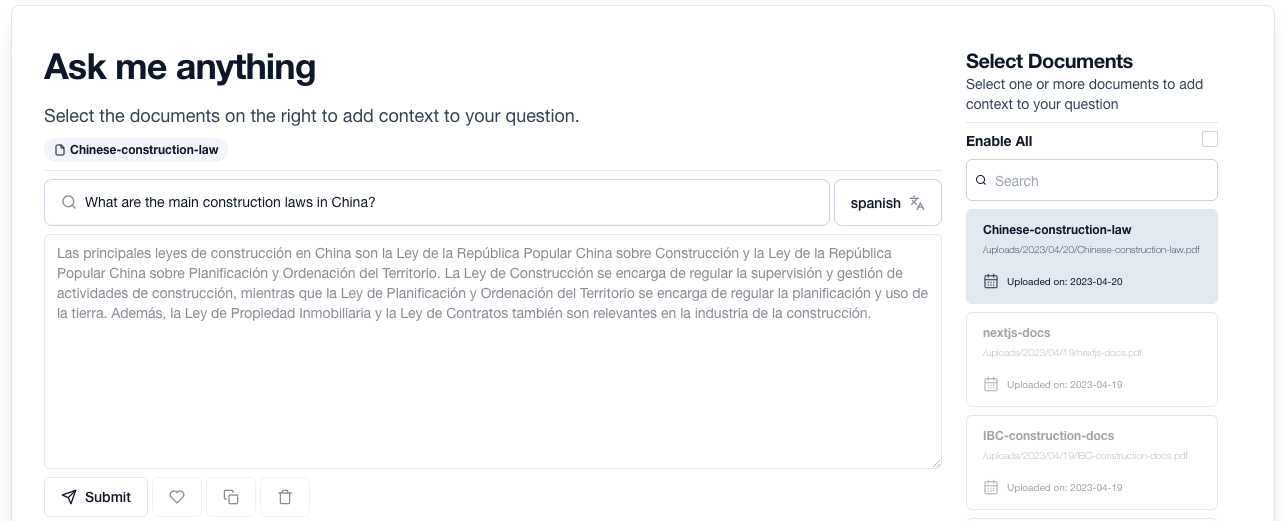

DocuAsk | Ask about YOUR documents in any language

DocuAsk is a brand new product that allows you to upload documents, ask questions, compare documents and get answers in any language. It’s pretty self-explanatory and solves a simple problem so I won’t go into much detail.

It’s a tool that helps you unlock global understanding by providing multilingual document queries and precise answers in your language.

🪜 I.e. 1, 2, 3, 4:

📚 Upload documents

❓ Ask questions

🔎 Compare documents

🙌 Get answers

🌏 All in any language 🌎

Powered by: LangChain, Pinecone and OpenAI

⚠️ Limitations and risks to consider:

→ Information on their privacy and data regulation is scarce. Use at your own discretion.

🧑‍🏫 Demo/Tutorial

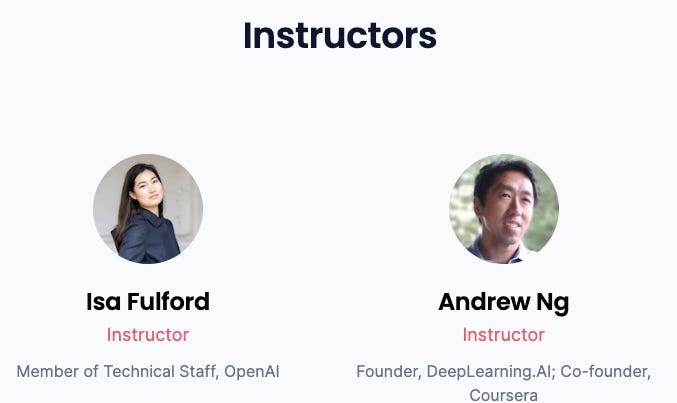

ChatGPT Prompt Engineering for Developers | Andrew Ng & Isabella Fulford

Discover the wizardry of LLMs in ChatGPT Prompt Engineering for Developers.

Ever wondered how to harness the power of Large Language Models (LLMs) to build futuristic applications? Don your coding capes and join Isa Fulford (OpenAI) and Andrew Ng (DeepLearning.AI) for a magical ride through the ChatGPT Prompt Engineering for Developers course.

This bite-sized course will conjure up LLM secrets, whip up prompt engineering best practices, and reveal how to use LLM APIs for an enchanting array of tasks:

Shortening spells (e.g. shrinking user reviews to their core)

Divining wisdom (e.g. sentiment classification, topic extraction)

Text transmutations (e.g. translation, spelling & grammar sorcery)

Expanding enchantments (e.g. conjuring email drafts on command)

You'll learn to craft the perfect incantation (i.e., effective prompts), master the art of prompt engineering, and even construct your very own chatbot familiar.
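To make those four task families a little more concrete, here is a minimal sketch of prompt templates in Python. It is not taken from the course: the template wording, the `<text>` delimiter convention and the `build_prompt` helper are all assumptions for illustration, and no API call is shown because that depends on which LLM provider you use.

```python
from typing import Dict

# Hypothetical templates, one per task family listed above.
PROMPT_TEMPLATES: Dict[str, str] = {
    "summarize": "Summarize the text between <text> tags in at most {max_words} words.",
    "infer": (
        "Classify the sentiment of the text between <text> tags as positive "
        "or negative, then list up to {max_topics} topics it mentions."
    ),
    "transform": (
        "Translate the text between <text> tags into {target_language} "
        "and fix any spelling or grammar errors."
    ),
    "expand": (
        "Write a short email reply to the customer message between <text> tags. "
        "Tone: {tone}."
    ),
}


def build_prompt(task: str, text: str, **options: object) -> str:
    """Fill in the template for `task` and append the delimited input text."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"Unknown task: {task!r}")
    instruction = PROMPT_TEMPLATES[task].format(**options)
    # Delimiters keep the instruction clearly separated from the user's text.
    return f"{instruction}\n<text>{text}</text>"


if __name__ == "__main__":
    review = "Great lamp, arrived fast, but the cable is a bit short."
    print(build_prompt("summarize", review, max_words=20))
    print(build_prompt("transform", review, target_language="French"))
```

The string returned by `build_prompt` is what you would send to your LLM of choice. Keeping the templates in one dictionary makes it easy to tweak the wording and compare results.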

🧙‍♂️ Are you the chosen one?

Fear not, young apprentices — ChatGPT Prompt Engineering for Developers is beginner-friendly. All you need is a touch of Python knowledge. And fret not, seasoned machine learning wizards! This course is an elixir for those seeking to push the boundaries of prompt engineering and wield LLMs with finesse.

If you want some help learning Python fundamentals, reply to this email and I’ll point you in the right direction.

If you found value in this week’s newsletter, please support us by sharing it with your closest friends and liking our publication at the bottom. It helps a lot 🤗

Until next week, earthlings, bots and cyborgs, code out!

Deiniol 🇦🇺

Something exclusively for you guys:

Join Neeva, the AI-integrated search engine, today for one month of their premium plan, totally free.

🏆 We got codesnippets.ai 28 new subscribers in just a few hours:

Get your business, podcast or AI Art in front of thousands of email subscribers, Twitter followers and AI enthusiasts, startup founders, aspiring entrepreneurs and artificial intelligence students who visit our website frequently.